Projects

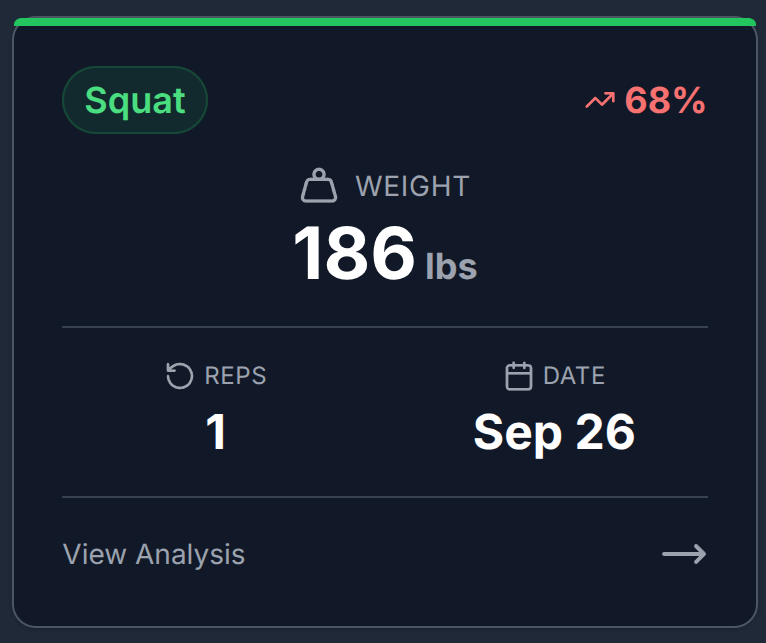

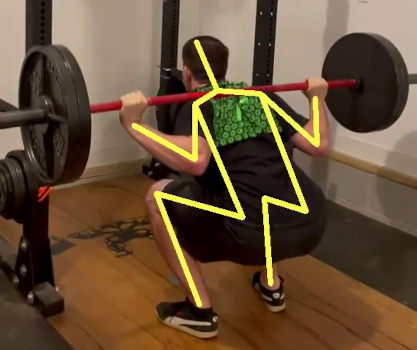

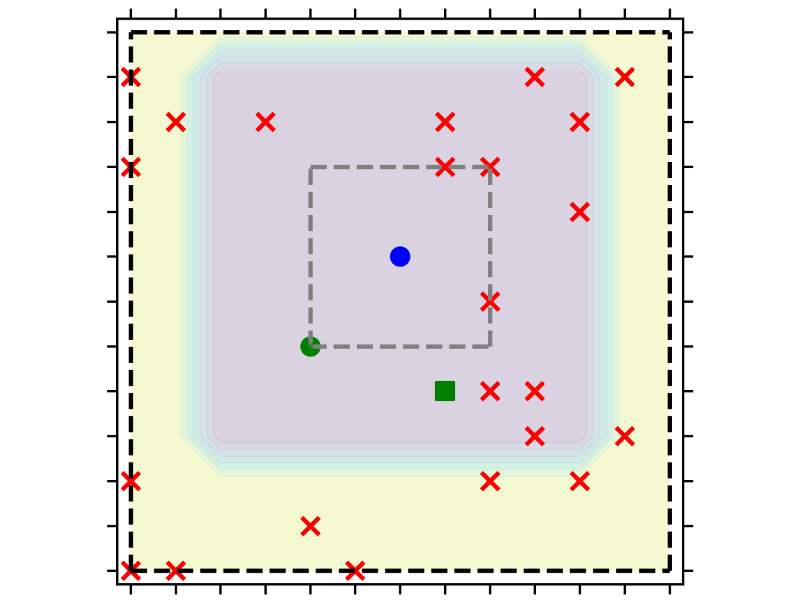

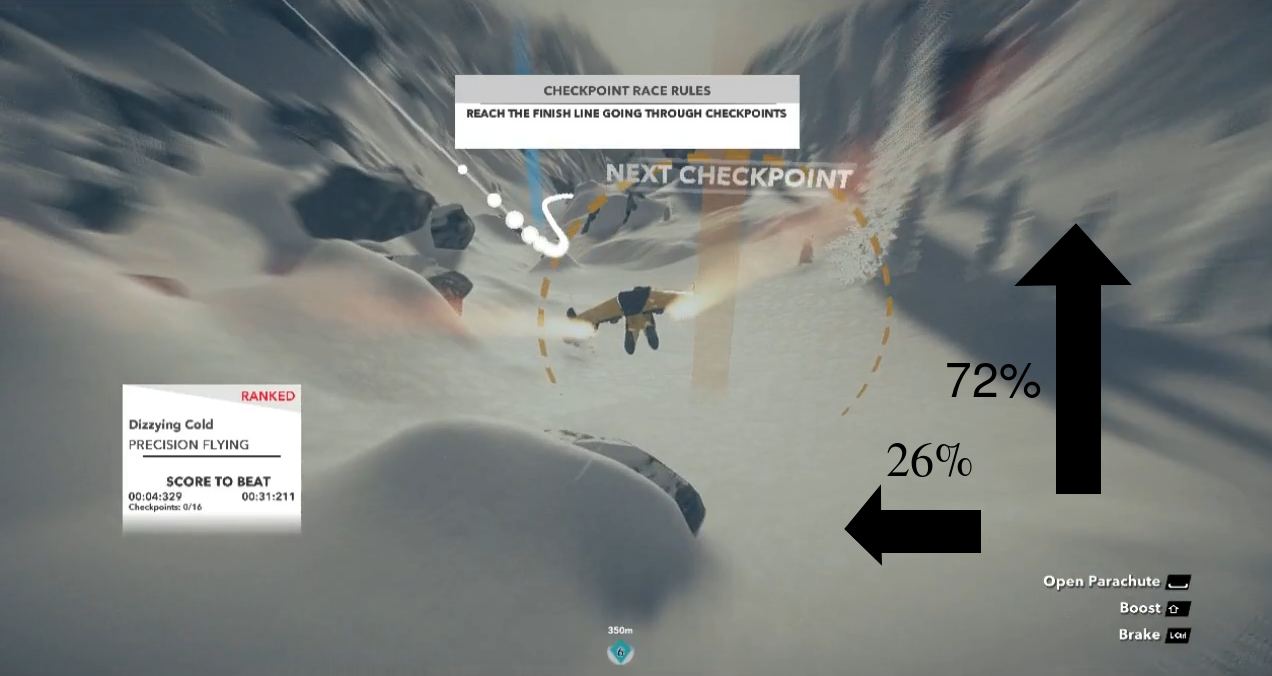

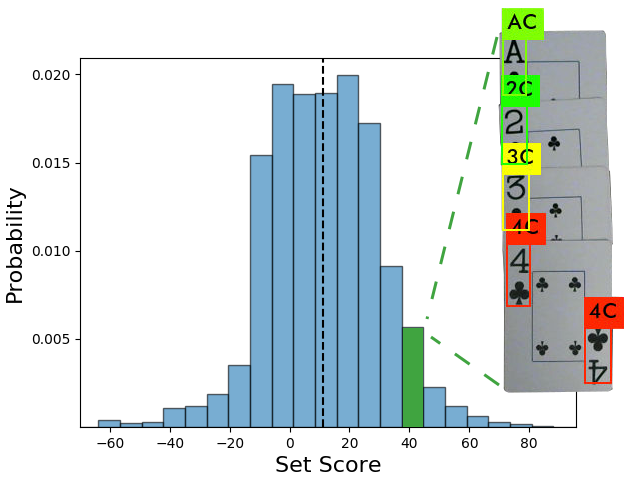

I am a huge proponent of project-based learning and am always working on something. I started working through projects of interest back in graduate school to solidify my knowledge and application of machine learning. These early projects were largely inspired by playing board games with friends. More recent projects, also inspired by my outside hobbies, have focused on the application of computer vision models to judging powerlifting form.